In fall 2015 we made an “emerging tech” bet on VR and chose a “swing for the fences” approach to risk and reward. We believed VR would rapidly emerge as a very large-scale industry, based on anecdotal buzz and our own profound amazement at early trials of the 6-DOF systems floating about Seattle via Valve’s early-access demonstrations.

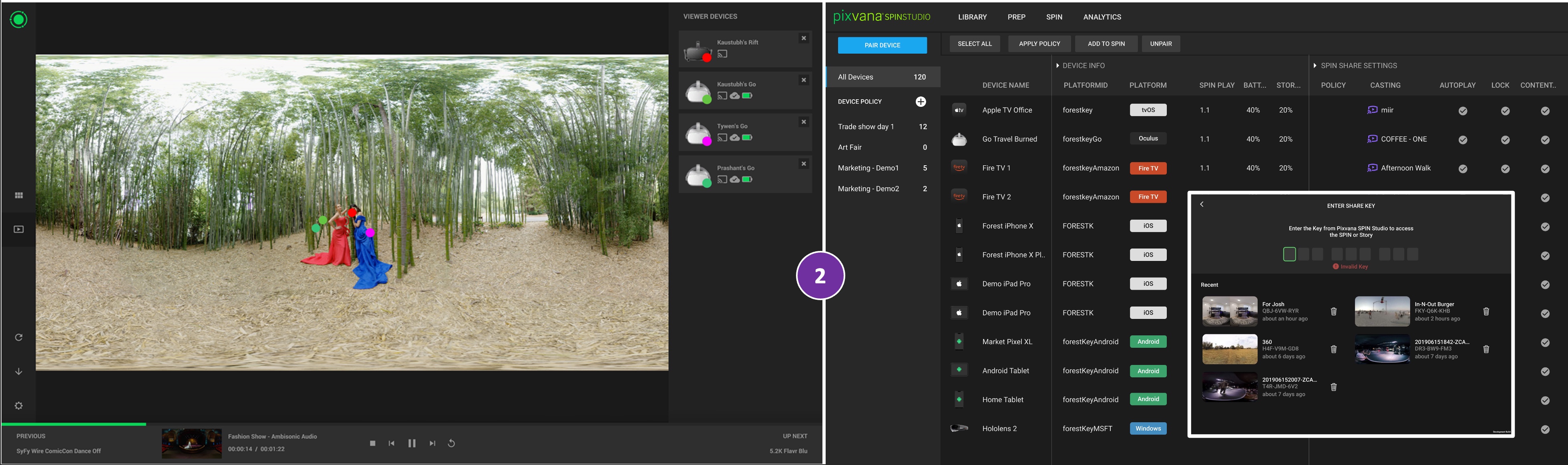

I’ve been a founder of several businesses and, by my count, have worked on ~15 v1.0 software products at both startups and large companies. Pixvana’s SPIN Studio platform far and away exceeded anything else I’ve ever been involved with in terms of system design, technical innovation, and potential to be of large commercial consequence for decades. Alas, measured by the end-user adoption we achieved, the work also scores as the most catastrophically irrelevant of my career.

Voodle, by comparison, was a practical, pragmatic application that required very little technical innovation or real change in users’ expectations, but it arrived at a time of “app saturation,” in a market with considerable friction against adopting yet another app. We executed well enough, but failed to find product-market fit.

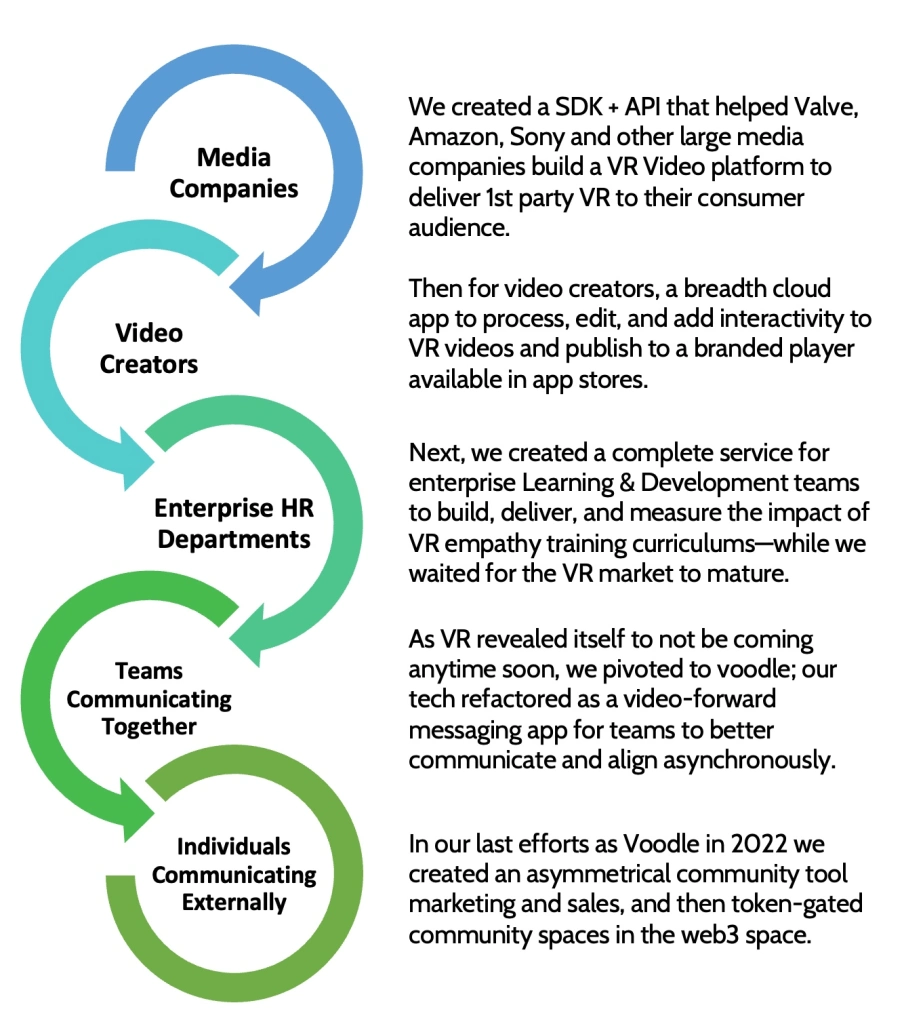

Over the last 7 years our approach evolved and ultimately meandered as we shipped a series of interesting tools that proved not quite right for customers. We started with large media companies and followed with makers; then we pivoted to enterprise learning orgs, then to individuals on teams, and in our final efforts ended up with “one-to-many” affinity communities. Our technology went from VR, to mobile selfie video messaging, and most recently to web3 and utility for NFTs in communities.

All of us who worked on the projects are incredibly disappointed. Hard work, good execution, dogged perseverance: these are table stakes. Timing and luck are also brutally critical ingredients. We aspired to delight customers. We didn’t. I’m chagrined that we pursued such a wide set of interesting technologies in search of problems to solve, a cardinal sin.

To our shareholders and advisors: thank you for supporting me and the team with your trust, mentorship, and capital. To my colleagues: we did a lot of great work, and I know we will all carry our experience together forward into the new chapters of our lives.

— Forest Key, Dec 2022

The last 7 years touched the lives of the many team members who worked together. For many, Pixvana and Voodle were a first job right out of college, and for a few they were a final job before retirement. From an office in Seattle, we evolved into a remote team spread across 8 states, working in our pajamas. We collaborated with passion and experienced both disappointments and achievements.